What makes a great qualitative evaluation for assessing wellbeing impact?

As a centre, we have always used both quantitative and qualitative evidence synthesis in our work to understand what works for whom, how, when and for how much.

Through our methods work, we have seen that qualitative research is particularly useful in establishing theories of change and early-stage evidence bases. So we wanted to answer the question “How do I do the best qualitative study for my project?”, both to advise those producing primary research and to contribute to the evidence base and share learnings more effectively.

The result, funded by the National Lottery Community Fund, is the paper Quality in Qual: A proposed framework to commission, judge and generate good qualitative evaluation in wellbeing impacts. It is based on interviews with ten leading organisations and individuals who have in-depth evaluation and methodological expertise. It sets out why work in this area is valuable and important, reviews what resources already exist, and identifies six prompts for potential uses of qualitative research in evaluations.

In this blog, author Ruth Puttick summarises the report.

Over the last few months, we have been looking at how organisations can undertake, commission and interpret qualitative evaluation methods. We set out to find a great answer to the question: how can both evaluation novices and evaluation experts conduct great qualitative evaluation to assess the impact of their wellbeing interventions?

With a focus on wellbeing, we’ve explored what guidance already exists and identified the gaps. Our report, Quality in Qual: A framework to commission, judge and generate qualitative evaluation in wellbeing impacts, is a new framework of prompts for thinking about qualitative evaluation.

What we did

To help us find an answer, we were privileged to interview an outstanding group of experts who generously shared their deep methodological expertise. We selected ten experts: specialists in qualitative evaluation and, deliberately, some who focus more on quantitative and economic approaches. Alongside these interviews, we undertook desk research and put out a call for evidence to help identify the existing guidance on qualitative evaluation methods.

What we found

It was striking that there is no shortage of guidance. We found fifteen items of structured guidance, including frameworks, toolkits and checklists. This count covers only free-to-access resources, and it excludes textbooks and ‘how to’ method guides on qualitative research and evaluation, of which there are countless examples. The volume of advice can be overwhelming, and much of it is based in health research.

Our interviews identified the need for a navigation tool, potentially akin to the Alliance for Useful Evidence’s Experimenters Inventory, which would provide more detail on qualitative evaluation methods, their pros and cons, and when to use them. There was also an appetite to explore mixed-methods Standards of Evidence, rather than develop guidance specifically on qualitative evaluation methods. Interviewees widely argued that neither quantitative nor qualitative evaluation alone can answer the question of impact.

Developing a new framework

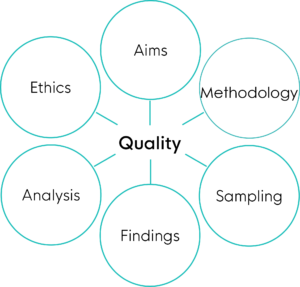

Drawing on these insights, our report proposes six ‘prompts’ for qualitative evaluation. These six areas are intended to guide those commissioning, conducting or critically appraising qualitative evaluation. However, we want to emphasise that this framework is a draft, intended to start a discussion.

The Six Prompts for Qualitative Evaluation

Development and user testing

This list of prompts could have been much longer than six, but we wanted to keep our framework simple and user-friendly. Our initial research identified value in having a framework to help navigate qualitative evaluation methods. But it was also clear that a framework alone is not enough. Alongside guidance, skills, training and capability-building are needed as well.

Before expanding the framework and analysing what additional support is required to ensure it is useful and usable, we would like to test the framework.

Get involved

We are keen to hear from those who conduct, commission or use qualitative evaluation to understand what challenges they face, and where useful guidance already exists. We would be delighted to hear your feedback on the framework. Does it work for you? What needs to change? We’d also love to hear any other thoughts and comments.

Please share your thoughts with info@whatworkswellbeing.org.